Time is money, and we cannot deny that automated tools such as robots can be very helpful, saving hours of work on simple tasks and ensuring nearly 24/7 support coverage. That holds true for automated chat services, which are becoming increasingly popular among banks, financial institutions and e-commerce companies. But with the popularization of chatbots, cybercriminals have found yet another way to steal data and deceive consumers.

Phishing during live chats? Is that what they’re doing?

Not exactly. Those interactions, with their pre-programmed responses and options for routing the user, require identification data. Chatbots request the information so politely and professionally, just as a bank manager would, right?

Since chatbots are being widely used by e-commerce companies as well as banks and financial institutions, the data requested often begins with account numbers, address, social security number and everything connected with the account records. That information alone is very valuable, and is regularly sold on the deep and dark web.

Scams often request sensitive data such as credentials (email and passwords) or credit card numbers. Sometimes the bot sends the user to an external page where it captures the information in the same way as traditional phishing.

In the majority of cases we’ve seen here at Axur, however, the requests are confined to personal data. As we mentioned above, that data becomes an item that enables criminals to open dummy accounts and initiate scams. So, always keep your eyes open, and remember that wise old aunt of yours who was afraid to give out information!

In more sophisticated cases, the information collected may be used to launch a spear phishing attack, which is a targeted attempt to capture the sensitive data of a specific individual or of selected people within a single company. How? With the volume of leaked data available on the web, a criminal can open the scam by showing the victim that they already know the victim’s email, name, social security number, and income, which suggests legitimacy. That makes it easier to convince the victim to hand over sums of money.

Let’s see some examples

Still not sure how all that data capture happens? Let’s consider a concrete example, in which a large bank from Brazil is affected:

"Hello, I’m your Virtual Assistant. What’s your name?"

"Enter your name here" / "Begin"

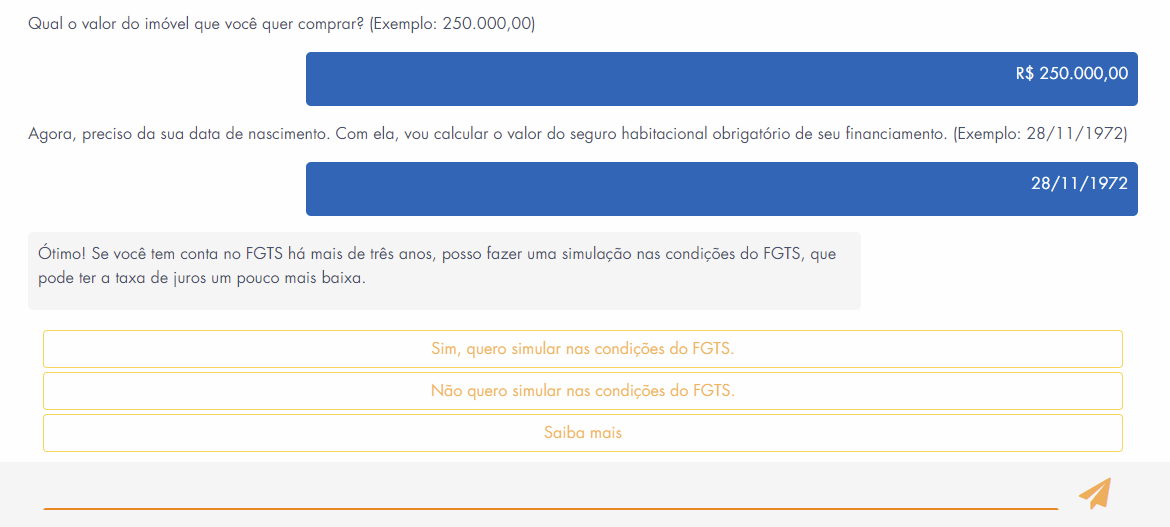

Right after that initial screen, the bot requests some data for a specific action, in this case a simulation of a real estate purchase. Continuing the conversation with some invented data, we see that the information requested includes birth date and details about unemployment insurance.

"What is the price on the real estate you would like to purchase?" / "$250,000.00"

"Now I need your birthdate. With that, I will calculate the home insurance required for your financing." / "11/28/1972"

"Great! If you have had unemployment insurance for more than three years, I can replicate the policy’s conditions, which may offer a lower interest rate."

"Yes, I would like to replicate the conditions of my unemployment insurance."

"No, I do not want to replicate the conditions of my unemployment insurance."

"Find out more"

Where does it happen, and how can it be identified?

In theory, we would all like to recognize a data-collection trap by noticing that the environment looks strange, or by verifying that the sender is not trustworthy. In real life, however, we know that’s not exactly how it happens. We have gleaned a few characteristics that are generally common to these scams:

URL: always be suspicious

This is tip number one for fending off any kind of phishing attack, or even outright theft. In addition to containing strange elements such as “justforyou,” phishing URLs sometimes rely on cybersquatting practices. One common technique in these attacks exploits Unicode, which includes letters from different alphabets that look nearly identical (homoglyphs), such as the Latin “a” (U+0061) and the Cyrillic “а” (U+0430). Attackers position them in a domain name to trick those who believe they are accessing a legitimate site.
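To make the homoglyph trick concrete, here is a minimal Python sketch of one way to flag a hostname whose labels mix letters from more than one alphabet. It is an illustration, not a description of how Axur detects these domains. The function names `scripts_in_label` and `has_mixed_script_label` are hypothetical, and taking the first word of each character's Unicode name is only a rough approximation of its script.

```python
import unicodedata


def scripts_in_label(label: str) -> set:
    """Approximate the set of scripts used by the letters in one hostname label."""
    scripts = set()
    for ch in label:
        if not ch.isalpha():
            continue  # ignore digits and hyphens
        # Unicode character names begin with the script,
        # e.g. "CYRILLIC SMALL LETTER A" or "LATIN SMALL LETTER A".
        name = unicodedata.name(ch, "")
        if name:
            scripts.add(name.split()[0])
    return scripts


def has_mixed_script_label(hostname: str) -> bool:
    """Return True if any dot-separated label mixes letters from several scripts."""
    return any(len(scripts_in_label(label)) > 1 for label in hostname.split("."))


# "b\u0430nk" looks like "bank" but its second letter is Cyrillic.
print(has_mixed_script_label("bank.example"))        # all Latin letters
print(has_mixed_script_label("b\u0430nk.example"))   # Latin mixed with Cyrillic
```

A check like this only catches mixed-script labels. A name written entirely in look-alike letters from a single non-Latin alphabet would pass it, so it should be treated as one signal among several rather than a complete defense.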

Very directed and limited questions

The example we gave above is very characteristic of the “construction” limitations of this type of site. The questions are generally very directed and limited (and may even come to a point where the process “locks up”).

Back to the example of real estate financing. The fraud can be spread through a sponsored post on social media. This kind of publication is normally aimed at people with “desirable” characteristics, meaning those who are likely to be interested in what’s being offered.

Beware of other related dangers

Proactively monitoring the use of your brand in digital scams is becoming critical as companies come to understand that they are responsible for protecting their customers throughout their digital travels.

As we’ve mentioned, these scams can be very sophisticated, involving fake social media profiles or malicious sites to support the attacks. Whatever the possibilities, being alert to these digital risks and providing adequate protection can prevent such dangers from staying online for a long time (in some cases, months!) and from damaging your brand’s credibility and your digital presence.

Right now, Axur has thousands of intelligent robots (these are real friends!) available to help you out. Find out about our solution for brand misuse and get more details about how it’s possible to have a digital presence that’s free from this kind of noise.

A journalist working as Content Creator at Axur, in charge of Deep Space and press activities. I have also analyzed lots of data and frauds here as a Brand Protection team member. Summing up: working with technology, information and knowledge together is one of my biggest passions!